Neuron Safari: Visual Science in Virtual Reality

Last year we launched a collaboration with the team at Eyewire, a game to map the brain. Eyewire is a neuroscience project that started in the lab of Dr. Sebastian Seung at MIT but has since spun off into a non-profit based in a WeWork space in Boston. They have succeeded in crowdsourcing part of their research by turning it into a web-based game. Over 180,000 players from 150 countries work to trace the path of neurons through microscope image volumes, with help from some custom image-processing AI.

Eyewire has assembled a dedicated group of citizen scientists who compete to score points, form teams, and earn achievements. It’s one of the best examples of distributed gamification that I’ve come across. They are tapping into a bit of humanity’s vast cognitive surplus to help improve our understanding of the brain.

Their AI is actually pretty good at tracing neurons, but it can’t (yet) match the acuity of the human visual system in identifying subtle boundary features and image patterns. The players help guide the automation by accepting some of its findings, rejecting others, and clicking to suggest areas that the software missed. The following video shows how a neuron can be traced through an image volume slice-by-slice, then reconstructed into a solid 3D structure.

So where do we come in?

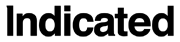

We approached Eyewire last year with an offer to turn their research data into an interactive VR experience for the Oculus Rift DK2. Creating visuals from research data, a.k.a. scientific visualization, is a core part of our work here at Indicated. In our VR experience, the viewer shrinks down to the nanoscale and flies through a field of 16 neurons that were discovered by players in Eyewire. As with most VR content, video capture doesn’t really do it justice. If you have a Rift, head over to Oculus Share to download it for yourself.

In this post we’ll dive into the approach we used to convert Eyewire research data into game engine assets and, ultimately, an interactive VR experience. We developed this application in Unity and released it for Windows PCs. Fair warning: this is going to get a bit technical…

Source data

The image below is a tiny 2D cropped section of the source data. Individual neurons were encoded in the source data as grayscale index values. To better illustrate the different index values, I have remapped them to a color gradient. The image shows that 6 individual neurons pass through this small section of the source data (can you spot the 6 different colors?).
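Remapping index values to a color gradient is a small lookup-table step. The sketch below shows one way to do it in plain Python, under a few assumptions. Label 0 is treated as background. The purple-to-yellow endpoint colors are arbitrary. The distinct labels are spaced evenly along the gradient in sorted order. It illustrates the idea and is not the exact script used for the figure.

```python
# Hypothetical sketch: remap integer neuron labels to colors along a gradient.
# Assumes label 0 is background; the gradient endpoints are arbitrary choices.


def lerp(a, b, t):
    """Linearly interpolate between two RGB tuples, rounding to integers."""
    return tuple(round(x + (y - x) * t) for x, y in zip(a, b))


def label_palette(labels, start=(68, 1, 84), end=(253, 231, 37)):
    """Map each distinct nonzero label to a color spaced evenly along a gradient.

    Background (label 0) stays black. Labels are sorted so the mapping is
    deterministic regardless of the order they appear in the image.
    """
    unique = sorted({lab for lab in labels if lab != 0})
    palette = {0: (0, 0, 0)}
    for i, lab in enumerate(unique):
        t = i / (len(unique) - 1) if len(unique) > 1 else 0.0
        palette[lab] = lerp(start, end, t)
    return palette


def colorize(slice_2d, palette):
    """Turn a 2D grid of labels into a 2D grid of RGB tuples."""
    return [[palette[lab] for lab in row] for row in slice_2d]
```

With six neurons in the crop, `label_palette` would return seven entries: six colors plus black for the background.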

The entire dataset was 2432 × 10496 × 6527 voxels, for a total of approx. 167 billion data points in the full 3D grid, although the majority of those were black “background” values. The dataset takes up roughly 650 GB when uncompressed. To understand how the data is grouped, imagine gathering together all of the “purple” voxels from the whole grid and throwing away the rest. After doing this, you would have one complete neuron.
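As a sanity check on the figures above, here is the arithmetic in Python. The post doesn't state how many bytes each voxel's index occupies. Assuming a 32-bit label (an assumption, not a detail from the source), the dense grid works out to about 666 GB in decimal units, or about 621 GiB. That is in the same range as the roughly 650 GB quoted.

```python
# Back-of-envelope size of the dense source volume described above.
# Assumption: each voxel stores a 32-bit label; the post does not state the bit depth.

DIMS = (2432, 10496, 6527)
BYTES_PER_VOXEL = 4  # hypothetical: 32-bit unsigned index


def voxel_count(dims):
    """Total number of grid cells in a dense 3D volume."""
    nx, ny, nz = dims
    return nx * ny * nz


def dense_size_bytes(dims, bytes_per_voxel):
    """Uncompressed size if every cell is stored, background included."""
    return voxel_count(dims) * bytes_per_voxel


count = voxel_count(DIMS)
size = dense_size_bytes(DIMS, BYTES_PER_VOXEL)
print(f"{count:,} voxels")        # 166,609,977,344 voxels
print(f"{size / 1e9:.0f} GB")     # 666 GB (decimal)
print(f"{size / 2**30:.0f} GiB")  # 621 GiB
```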

Sparse volume data

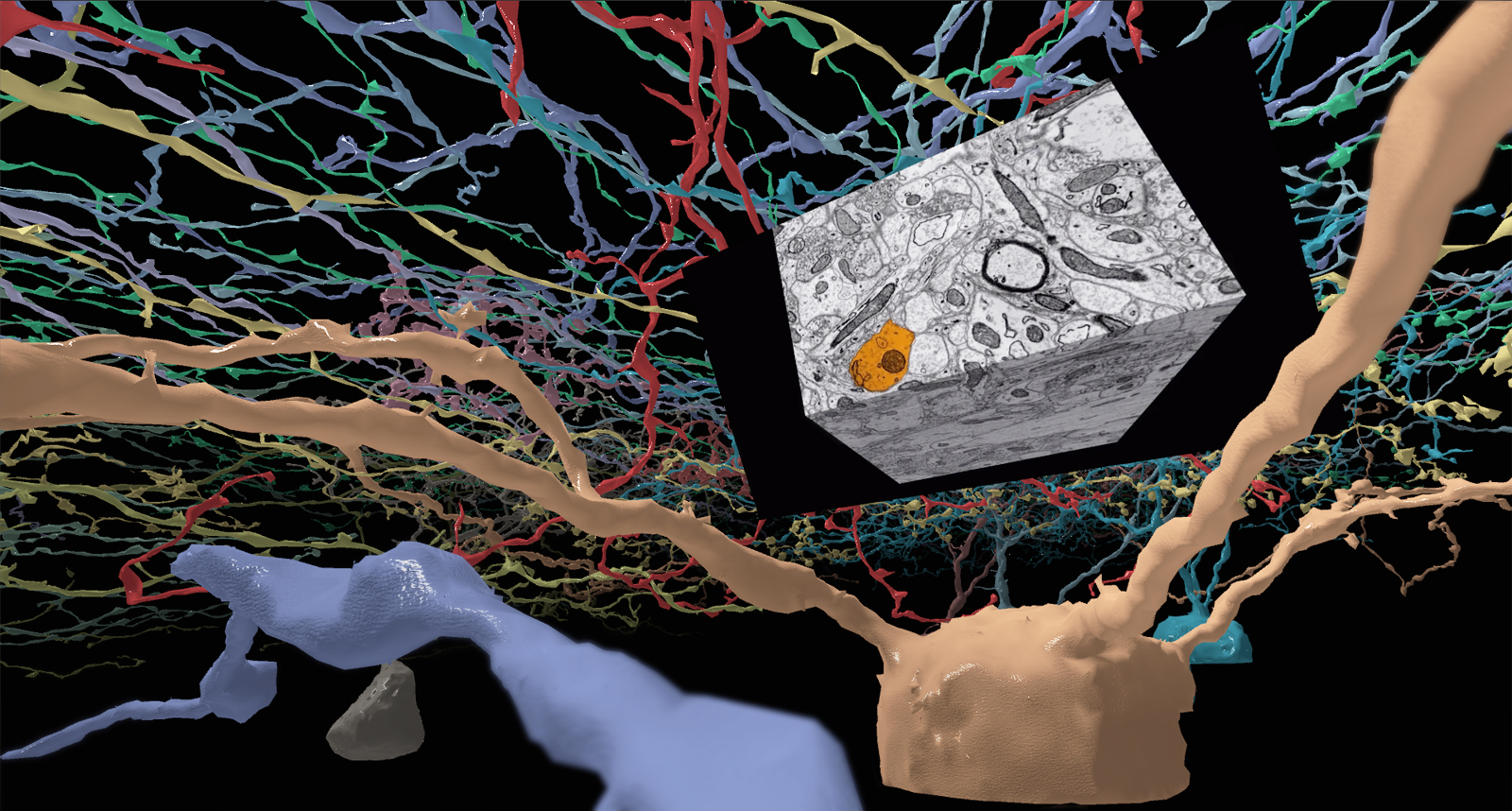

We used OpenVDB to create sparse volume representations of individual neurons. This library is an open source project led by DreamWorks Animation and used primarily in CG visual effects to represent voxel grids and level sets that change over time. It’s great for rendering amorphous effects like clouds, water, smoke, and dust. It’s also really well-suited for storing voxel representations of long, spindly structures like neurons.

We created a C++ utility that searches through the source data for sets of voxels with the same index and adds them to an OpenVDB grid as they are located. As an intermediate step, we saved out individual neurons as .vdb files, an efficient way to store complete voxel structures without losing any information. More importantly, the structures are stored in their original coordinate system, which means that the neurons can later be loaded all together without disturbing their relative offsets. The following image shows what’s stored in the .vdb file after we isolated one neuron from the source data.
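The grouping step can be sketched in a few lines. This is a minimal Python illustration, not the C++ utility itself. A dict of coordinate sets stands in for one OpenVDB grid per neuron, and the tiny `volume` is made-up test data. The key property from the text is preserved: coordinates stay in the global frame, so separately extracted neurons still line up.

```python
from collections import defaultdict

# Minimal sketch of the extraction step: scan a dense labeled volume and
# collect the coordinates of every non-background voxel, grouped by label.
# A Python dict of sets stands in for one OpenVDB grid per neuron.

BACKGROUND = 0


def extract_neurons(volume):
    """Group voxel coordinates by label.

    `volume` is indexed as volume[z][y][x]. Coordinates are kept in the
    original (global) frame, so separately extracted neurons still line up.
    """
    neurons = defaultdict(set)
    for z, plane in enumerate(volume):
        for y, row in enumerate(plane):
            for x, label in enumerate(row):
                if label != BACKGROUND:
                    neurons[label].add((x, y, z))
    return dict(neurons)


volume = [
    [[0, 3, 3],
     [0, 0, 7]],
    [[0, 3, 0],
     [7, 7, 7]],
]
neurons = extract_neurons(volume)
print(sorted(neurons))     # [3, 7]
print(sorted(neurons[3]))  # [(1, 0, 0), (1, 0, 1), (2, 0, 0)]
```

A real pass over hundreds of gigabytes would have to stream the volume in chunks rather than hold it in memory. The per-voxel logic stays the same.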

Unlike the dense source data, the file stores only the orange grid locations, saving a massive amount of memory compared with the full grid. The cell shown here is made up of about 43 million voxels and was saved to disk as a 30 MB .vdb file.
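The memory savings come from storing only the regions that contain data. The sketch below illustrates the general idea of block-sparse storage, loosely inspired by OpenVDB's default 8x8x8 leaf nodes. OpenVDB's real tree has two further levels of internal nodes and stores values and bitmasks rather than Python sets, so this is a simplification. It counts how many leaf blocks a thin, neuron-like strand actually touches.

```python
# Simplified illustration of block-sparse voxel storage. Only 8x8x8 blocks
# that contain at least one active voxel are allocated. This is a one-level
# stand-in for OpenVDB's deeper tree, not its actual data structure.

LEAF_DIM = 8  # voxels per leaf edge (OpenVDB's default leaf size)


class SparseGrid:
    def __init__(self):
        # block coordinate (bx, by, bz) -> set of active local offsets in that block
        self.leaves = {}

    @staticmethod
    def _split(x, y, z):
        # Floor division/modulo also handle negative coordinates correctly.
        key = (x // LEAF_DIM, y // LEAF_DIM, z // LEAF_DIM)
        local = (x % LEAF_DIM, y % LEAF_DIM, z % LEAF_DIM)
        return key, local

    def set_active(self, x, y, z):
        key, local = self._split(x, y, z)
        self.leaves.setdefault(key, set()).add(local)

    def is_active(self, x, y, z):
        key, local = self._split(x, y, z)
        return local in self.leaves.get(key, set())

    def active_voxel_count(self):
        return sum(len(offsets) for offsets in self.leaves.values())

    def leaf_count(self):
        return len(self.leaves)


grid = SparseGrid()
for x in range(1000):  # a thin 1000-voxel "neurite" along the x axis
    grid.set_active(x, 0, 0)
stored_cells = grid.leaf_count() * LEAF_DIM ** 3
dense_cells = 1000 ** 3  # cells in the bounding dense grid
print(grid.leaf_count(), stored_cells, dense_cells)  # 125 64000 1000000000
```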

Converting to polygonal mesh

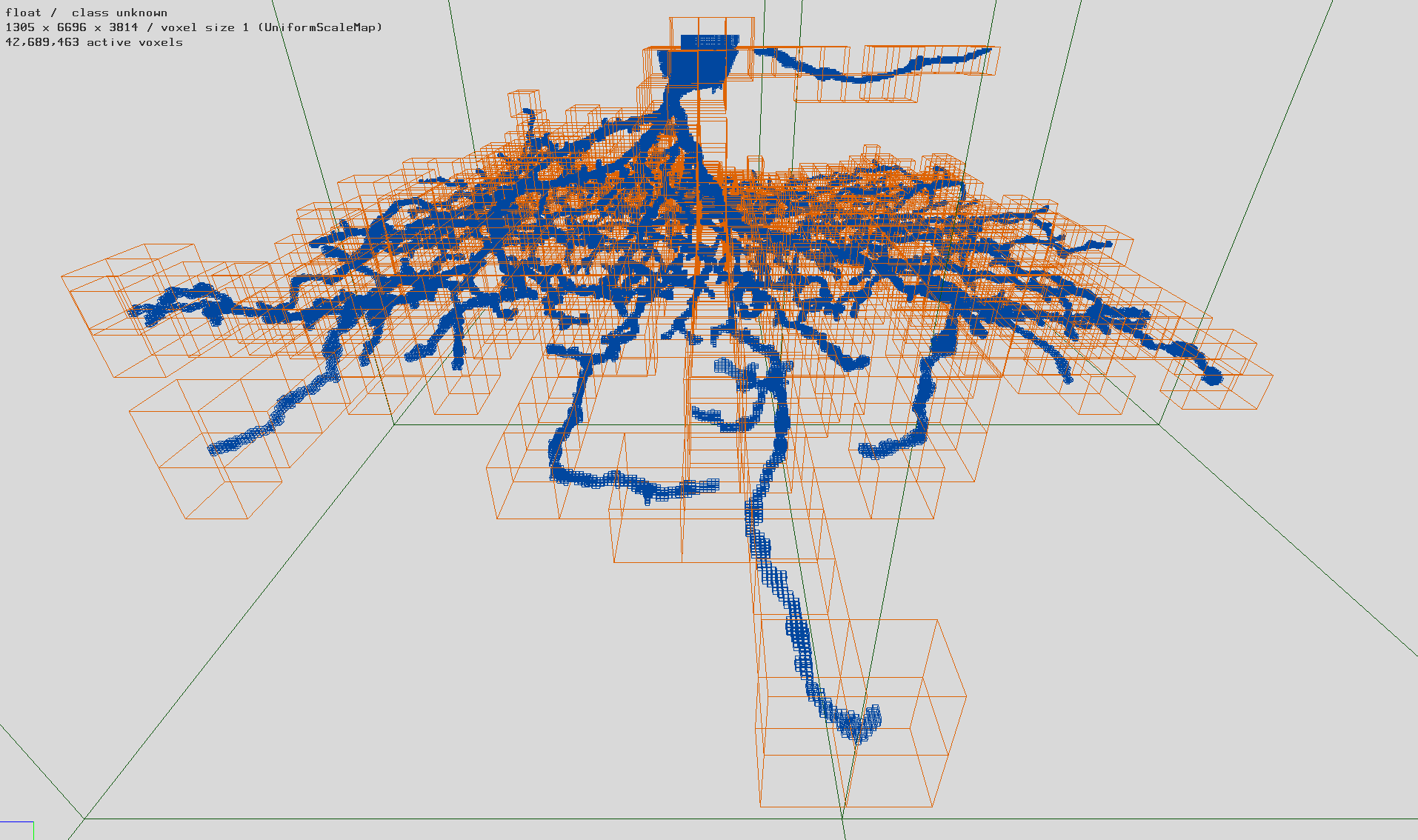

The OpenVDB library also includes methods to convert sparse voxel grids into polygonal meshes. The meshing process can be tuned in order to control the total number of polygons in the output meshes. We selected meshing parameters to ensure that all 16 neurons could be displayed simultaneously by our real-time engine (more on that later) while at the same time avoiding oversimplification. Simplifying a mesh too aggressively can result in distracting visual artifacts and large gaps in the model surface.
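To see why polygon count is the quantity to watch, consider the crude meshing sketch below. It emits one quad for every voxel face that borders empty space. This is a swapped-in, much simpler technique than OpenVDB's mesher, which extracts a smooth isosurface and has an adaptivity setting for reducing polygon count. It only illustrates that triangle count tracks surface area. Long, spindly shapes like neurons have a lot of surface per voxel.

```python
# Crude stand-in for meshing ("blocky" surface extraction): one quad per voxel
# face that touches empty space. NOT OpenVDB's volume-to-mesh algorithm.

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def exposed_faces(voxels):
    """Return (voxel, direction) pairs for faces that border empty space.

    `voxels` should be a set of (x, y, z) tuples for fast membership tests.
    """
    faces = []
    for (x, y, z) in voxels:
        for (dx, dy, dz) in NEIGHBORS:
            if (x + dx, y + dy, z + dz) not in voxels:
                faces.append(((x, y, z), (dx, dy, dz)))
    return faces


def triangle_count(voxels):
    """Each exposed quad is split into two triangles."""
    return 2 * len(exposed_faces(voxels))


# Same voxel count, different shapes: a thin strand vs. a compact cube.
strand = {(x, 0, 0) for x in range(8)}
cube = {(x, y, z) for x in range(2) for y in range(2) for z in range(2)}
print(len(exposed_faces(strand)), len(exposed_faces(cube)))  # 34 24
```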

Since this experience was designed to run on a typical VR gaming PC, we could generate relatively complex meshes. The 16 neurons in the experience add up to about 3 million polygons in total. We could have pushed this further with optimizations like level-of-detail (LOD) meshes, but we’ll have to save that for the next version. Unity also supports GPU mesh tessellation, which did make the models look gorgeous up close, but we found that it compromised the frame rate (which is critical to maintain for a good VR experience).
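Level-of-detail selection, the optimization deferred above, usually means picking a coarser mesh as an object gets farther from the camera. The sketch below assumes hypothetical distance thresholds and per-level triangle counts. LOD 0 uses the roughly 3,000,000 / 16 = 187,500 polygons per neuron implied by the totals above. It shows the selection logic only, not Unity's own LOD system.

```python
import bisect

# Sketch of distance-based level-of-detail (LOD) selection. The thresholds and
# the per-level triangle counts are made-up illustration values.

LOD_DISTANCES = [10.0, 30.0, 80.0]  # hypothetical switch distances, scene units
TRIS_PER_LOD = [187_500, 60_000, 20_000, 5_000]  # LOD 0 = ~3M polygons / 16 neurons


def select_lod(distance, thresholds=LOD_DISTANCES):
    """Return 0 for the finest mesh, increasing as the object gets farther away."""
    return bisect.bisect_right(thresholds, distance)


def frame_triangles(distances, tris_per_lod=TRIS_PER_LOD):
    """Total triangles drawn when each neuron uses the LOD for its distance."""
    return sum(tris_per_lod[select_lod(d)] for d in distances)


# All 16 neurons close up costs the full budget; spreading them out costs far less.
print(frame_triangles([5.0] * 16))
print(frame_triangles([5.0, 20.0, 50.0, 100.0]))
```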

Rendering the meshes

We encoded the colors for each neuron in the mesh vertices so that we could use a single material for all of them. We also used a technique called tri-planar texture mapping to add a normal map without using UV coordinates, giving the models a shiny/bumpy look up close.
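Tri-planar mapping replaces UV coordinates with three axis-aligned projections of the surface position. The three results are blended using weights derived from the surface normal. The sketch below shows that math in plain Python rather than as a Unity shader. The sharpness exponent and texture scale are assumed values. It also omits the extra step a normal-map version needs to reorient the sampled tangent-space normals for each projection.

```python
# Sketch of tri-planar mapping math: three planar projections blended by the
# surface normal. Sharpness and scale are assumed tuning values.


def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X-, Y- and Z-facing projections (sum to 1).

    Raising |n| to a power sharpens the transition between projections.
    The normal must be nonzero.
    """
    w = [abs(c) ** sharpness for c in normal]
    total = sum(w)
    return tuple(c / total for c in w)


def triplanar_uvs(position, scale=1.0):
    """Texture coordinates for each projection, taken from world position."""
    x, y, z = (c * scale for c in position)
    return (y, z), (x, z), (x, y)  # viewed along X, along Y, along Z


def sample_triplanar(texture, position, normal, sharpness=4.0, scale=1.0):
    """Blend three lookups of `texture`, a function (u, v) -> value."""
    weights = triplanar_weights(normal, sharpness)
    uvs = triplanar_uvs(position, scale)
    return sum(w * texture(u, v) for w, (u, v) in zip(weights, uvs))
```

On a surface facing straight along +Z, only the XY projection contributes. On a 45-degree slope between X and Y, the X- and Y-facing projections each contribute half. Because no UVs are needed, the same material works on every generated neuron mesh.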

The VR experience

More than any other interactive platform, VR content must be carefully crafted to avoid causing nausea and eye-strain in viewers. The list of VR Best Practices is long. Here and here are good places to start.

In developing Neuron Safari, we made the following design choices:

- Slow pacing: We restricted the experience to a relatively slow drift so that the demo would be comfortable for a wide range of viewers. It also gives the experience an almost meditative quality.

- Steady speed: Acceleration is known to induce nausea, so we maintain a constant speed.

- Motion is predominantly in the forward direction: VR users tolerate moving forward much better than moving sideways or backward.

- No changing the camera orientation! For me, this is the biggest cause of nausea in VR. Artificially turning the camera immediately creates a disconnect between the user’s head movements and the orientation of their view. When possible, let the user maintain full agency over camera direction.

- 75 frames per second, no exceptions: Our goal was for the application to maintain the DK2’s native frame rate at all times, even on midrange hardware. Relatively simple shading, no dynamic shadows, and careful control over the polygon budget all contributed to this. Nothing breaks immersion and makes users tear off the headset faster than missing this frame rate target.

- Gaze-based navigation: We don’t require a game controller or keyboard to navigate (although you do have the option to use them). Turning happens gradually in response to where the viewer is looking, so a viewer who stares at an object in the environment will naturally turn to fly toward it. To put it another way, your virtual ship turns to meet your gaze, not the other way around.

- Free exploration: The user isn’t put “on rails” and can fly anywhere in the 3D space, even out into the black, lonely void.

- Fixed world up-vector: This provides a consistent frame of reference. Our testing showed that truly free 3D navigation, where the up-vector is free to change, is more disorienting and nausea-inducing. In this way the experience is more like a flight simulator than a space simulator. The view returns to the world up-vector gradually over time, at a rate proportional to velocity (it only happens when in motion) and dependent on direction (we only “right the ship” when flying perpendicular to the world up-vector). Together, these two factors make the user’s point of view return to the world up-vector in a gradual and fairly natural way.

- Conservative near clipping: Structures that appear too close to the camera can induce eyestrain and nausea. Notice that as you fly through the field of neurons, they clip away before coming too close. There is a trade-off with immersion here, since objects don’t behave this way in reality, but we determined it was necessary for this experience to work.

- Tasteful ambient music: The track we selected was “Among the Stars” by Ben Beiny, which had the right emotional character and tempo.

- Content points: We scattered flashing “content points” throughout the environment, much like a scavenger hunt. Activating a content point brings up a virtual billboard that shows either image or video content, and the user is moved and turned into position to view it. This movement centers on the flashing point, so the user can keep their eyes on it during the animation (which helps avoid nausea). The concept borrows from ballet, where dancers use a technique called “spotting” to prevent dizziness while spinning by fixing their gaze on an anchor point.
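Several of the navigation rules above can be combined into a single per-frame update: steady forward speed, gaze-based turning, and gradual righting toward the world up-vector. The sketch below is a hypothetical Python version of that logic, not the shipped Unity code. SPEED, TURN_RATE and RIGHTING_RATE are made-up tuning values. The text says the ship is righted only when flying perpendicular to world up; the sketch approximates that with a weight that peaks at perpendicular and fades to zero when flying straight up or down.

```python
import math

# Hypothetical sketch of the navigation rules (not the shipped Unity code).
# SPEED, TURN_RATE and RIGHTING_RATE are made-up tuning values.

WORLD_UP = (0.0, 1.0, 0.0)
SPEED = 0.5          # constant drift speed, scene units per second
TURN_RATE = 0.8      # how quickly the heading follows the gaze (per second)
RIGHTING_RATE = 0.6  # how quickly roll is removed while moving


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def step(position, forward, up, gaze, dt):
    """Advance the ship by one frame and return (position, forward, up).

    All vectors are world-space 3-tuples; `gaze` is the unit direction the
    viewer's head points. Assumes `dt` is one short frame, so the blended
    heading never collapses to zero length.
    """
    # Gaze-based turning: ease the heading a little toward the gaze each frame.
    t = min(1.0, TURN_RATE * dt)
    forward = normalize(add(scale(forward, 1.0 - t), scale(gaze, t)))

    # Righting: pull `up` toward world up, scaled by speed and by how close the
    # heading is to horizontal (no correction when flying straight up or down).
    level = 1.0 - abs(dot(forward, WORLD_UP))
    k = min(1.0, RIGHTING_RATE * SPEED * level * dt)
    up = add(scale(up, 1.0 - k), scale(WORLD_UP, k))

    # Re-orthonormalize so `up` stays perpendicular to the new heading.
    right = normalize(cross(up, forward))
    up = cross(forward, right)

    # Steady speed, forward only: no acceleration and no sideways drift.
    position = add(position, scale(forward, SPEED * dt))
    return position, forward, up


dt = 1 / 75  # one frame at the DK2's native 75 Hz, about 13.3 ms
pos, fwd, up = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.5, math.sqrt(3) / 2, 0.0)
gaze = normalize((1.0, 0.0, 1.0))  # viewer looks 45 degrees to the right
for _ in range(2000):
    pos, fwd, up = step(pos, fwd, up, gaze, dt)
```

After a few seconds of simulated flight, the heading has swung around to the gaze direction. The initial 30-degree roll has also been removed, without any sudden rotation of the view.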

Coverage and reviews

Indicated was invited to share some of our work in interactive scientific visualization at the NIH Science in 3D Conference in January 2015. We debuted Neuron Safari there and had a positive response from the attendees who tried the demo.

TechCrunch—EyeWire Is Making Neuroscience Research Cool Again

Kevin Raposo, a journalist for TechCrunch, had an opportunity to try out Neuron Safari and wrote: “I was blown away. As soon as I strapped myself into ‘the rift,’ I was immersed in an environment that was filled with highways of neurons sprawled out in every direction. It was intense…This was one of the coolest experiences I’ve ever had with the Oculus Rift.”

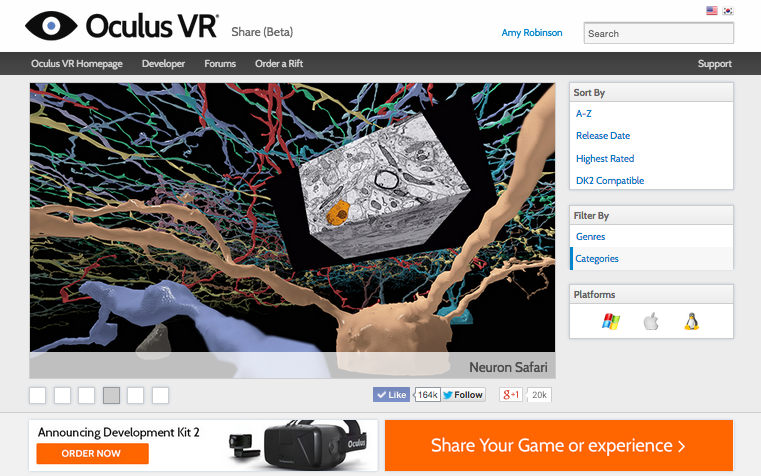

Oculus Share featured Neuron Safari on the front page:

UK Rifter says, “This is beautiful, really amazing.” “Wow, there’s so much detail here…”

First Day Reviews says, “Smooth as butter…” “Definitely cool for education.”

A few of the neuron surface models we produced from Eyewire data were used in 3Scan’s Pieces of Mind exhibit that appeared at the San Francisco Exploratorium from January to March of this year.

What’s next?

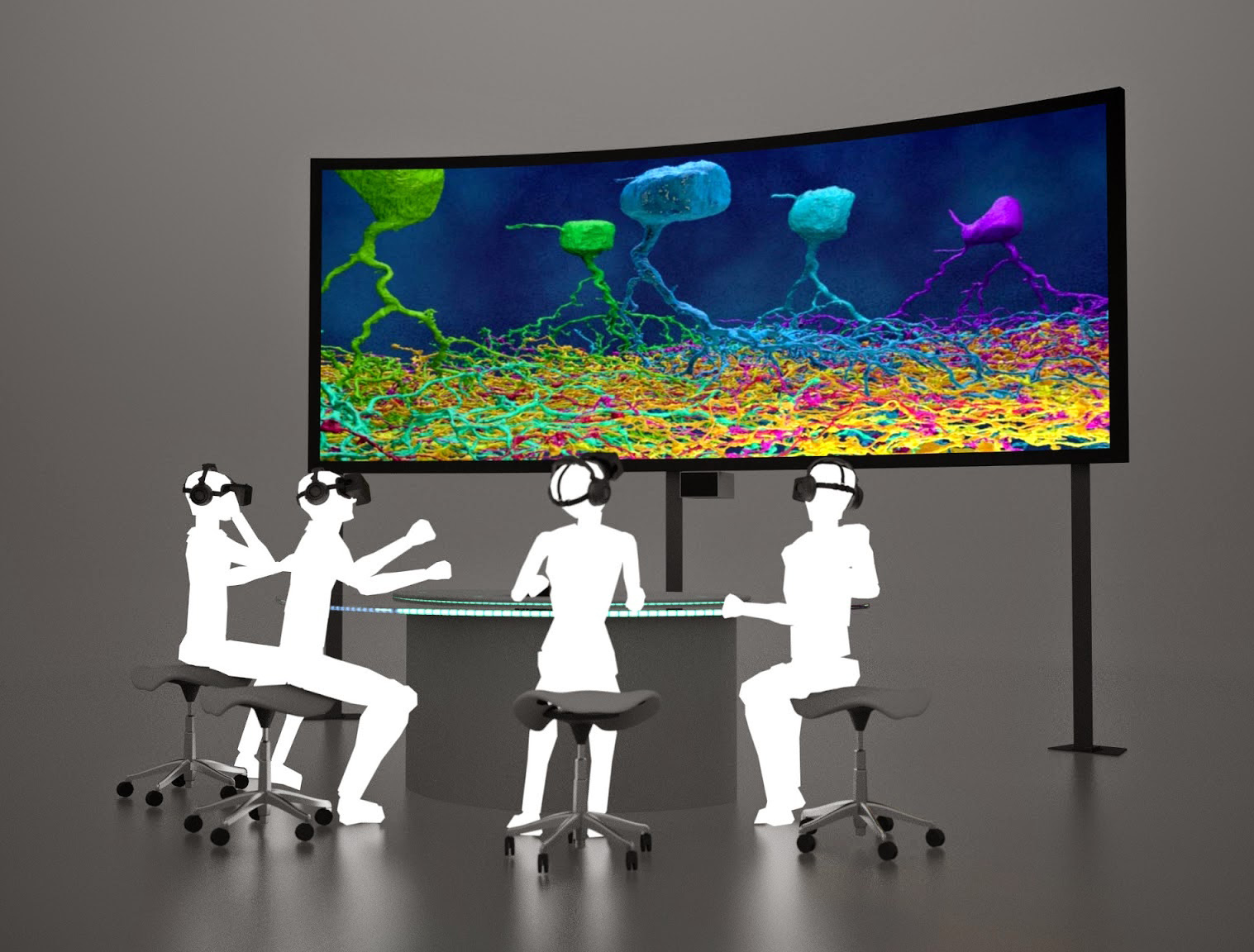

The future of VR is social and collaborative. The next iteration of Neuron Safari will bring multiple users together in a single virtual environment, allowing them to explore together and engage with content in a synchronized experience.

Indicated develops custom VR programs for healthcare clients, in association with Confideo Labs and DoctorVirtualis. Get in touch to learn more about how you can use VR in immersive training, marketing, and conference applications.